Install Spark on Windows (PySpark) + Configure Jupyter Notebook

Download Apache Spark 2.4.6 and extract it in C:\spark\.

Set the environment variable HADOOP_HOME to C:\hadoop and SPARK_HOME to C:\spark.

Add %HADOOP_HOME%\bin and %SPARK_HOME%\bin to the Path.

Install the Microsoft Visual C++ 2010 Redistributable Package (x64).

Open up the permissions on /tmp/hive with winutils:

C:\Users\maz>%HADOOP_HOME%\bin\winutils.exe chmod 777 /tmp/hive

Either create a conda env for Python 3.6, install pyspark=2.4.6, spark-nlp, and numpy, and use Jupyter or the Python console, or, in the same conda env, go to the Spark bin directory and run pyspark --packages :spark-nlp_2.11:2.5.5.

This video shows how to install PySpark on Windows and use it with Jupyter Notebook. PySpark is used for data science (data analytics, big data, Machine L…).
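The environment settings above are easy to get slightly wrong, so a quick check can help. The following is a minimal sketch, not part of the original instructions: the function name `missing_spark_settings` is hypothetical, and it only inspects a mapping of variables (such as `os.environ`) rather than touching the file system.

```python
import ntpath  # Windows path rules, usable on any OS


def missing_spark_settings(env):
    """Return a list of problems found in a Windows-style environment mapping.

    Hypothetical helper: checks that HADOOP_HOME and SPARK_HOME are set and
    that their bin folders appear on PATH (entries separated by ';').
    """
    problems = []
    path_entries = [
        ntpath.normcase(ntpath.normpath(entry))
        for entry in env.get("PATH", "").split(";")
        if entry
    ]
    for var in ("HADOOP_HOME", "SPARK_HOME"):
        home = env.get(var)
        if not home:
            problems.append(f"{var} is not set")
            continue
        bin_dir = ntpath.normcase(ntpath.normpath(ntpath.join(home, "bin")))
        if bin_dir not in path_entries:
            problems.append(f"%{var}%\\bin is not on PATH")
    return problems


# Example with the values used in this guide (assumed, not read from a real machine)
example_env = {
    "HADOOP_HOME": "C:\\hadoop",
    "SPARK_HOME": "C:\\spark",
    "PATH": "C:\\hadoop\\bin;C:\\spark\\bin",
}
print(missing_spark_settings(example_env))
```

On a real machine you could pass `os.environ` instead of the example dictionary. An empty list means both variables are set and both bin folders are on the Path.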

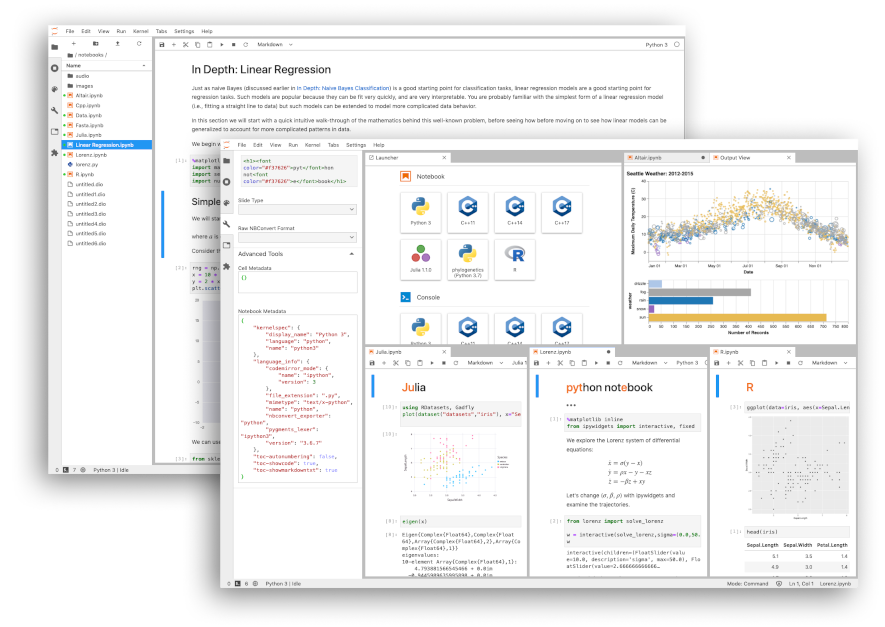

Install Java. Make sure Java is installed.

Apache Spark is an open-source cluster-computing framework. Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

For HDInsight clusters version 3.6 and 4.0, enter the command pip install sparkmagic==0.13.1 to install Spark magic.

To set the directory back, use the following commands: cd, then cd spark-2.4.5-bin-hadoop2.

Steps to installing PySpark for use with Jupyter: this solution assumes Anaconda is already installed, an environment named test has already been created, and Jupyter has already been installed to it.
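One common way to make the `pyspark` launcher open Jupyter Notebook is to set the `PYSPARK_DRIVER_PYTHON` and `PYSPARK_DRIVER_PYTHON_OPTS` environment variables before starting it. The text above does not say which method it uses, so treat this as a sketch under that assumption. The helper name `jupyter_driver_env` is hypothetical.

```python
import os


def jupyter_driver_env(base_env=None):
    """Return a copy of the environment in which `pyspark` starts Jupyter Notebook.

    Assumption: the PYSPARK_DRIVER_PYTHON variables are used to pick the
    driver front end; this guide may configure Jupyter differently.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["PYSPARK_DRIVER_PYTHON"] = "jupyter"
    env["PYSPARK_DRIVER_PYTHON_OPTS"] = "notebook"
    return env


# Possible usage from the activated conda env (not executed here):
#   import subprocess
#   subprocess.run("pyspark", shell=True, env=jupyter_driver_env())
```

Building a copy of the environment rather than changing `os.environ` keeps the change limited to the launched process.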

HOW TO INSTALL SPARK ON WINDOWS WITH JUPYTER

During installation, after changing the path, select the option that sets the Path. Make sure you install Java in the root, at C:\java, because Windows doesn't like spaces in the path.

Now, let's see how to access Jupyter Notebook from the Spark environment. Before that, you need to enable the access permission to use Jupyter Notebook through Spark.
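The advice about avoiding spaces in install paths can be checked with a few lines of Python. This is only an illustrative sketch: the helper name `paths_with_spaces` is hypothetical, and `JAVA_HOME` is an assumed variable name that the text above does not mention.

```python
def paths_with_spaces(env, names=("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME")):
    """Return the variables from `names` whose value contains a space.

    Hypothetical helper; JAVA_HOME is an assumed variable name.
    Variables that are not set are ignored.
    """
    return {name: env[name] for name in names if " " in env.get(name, "")}


# Example values (assumed for illustration)
example_env = {"JAVA_HOME": "C:\\Program Files\\Java", "SPARK_HOME": "C:\\spark"}
for name, value in paths_with_spaces(example_env).items():
    print(f"{name} contains a space: {value}")
```

On a real machine you could pass `os.environ` in place of the example dictionary.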
